This week I explored the Runway interface and played with several models.

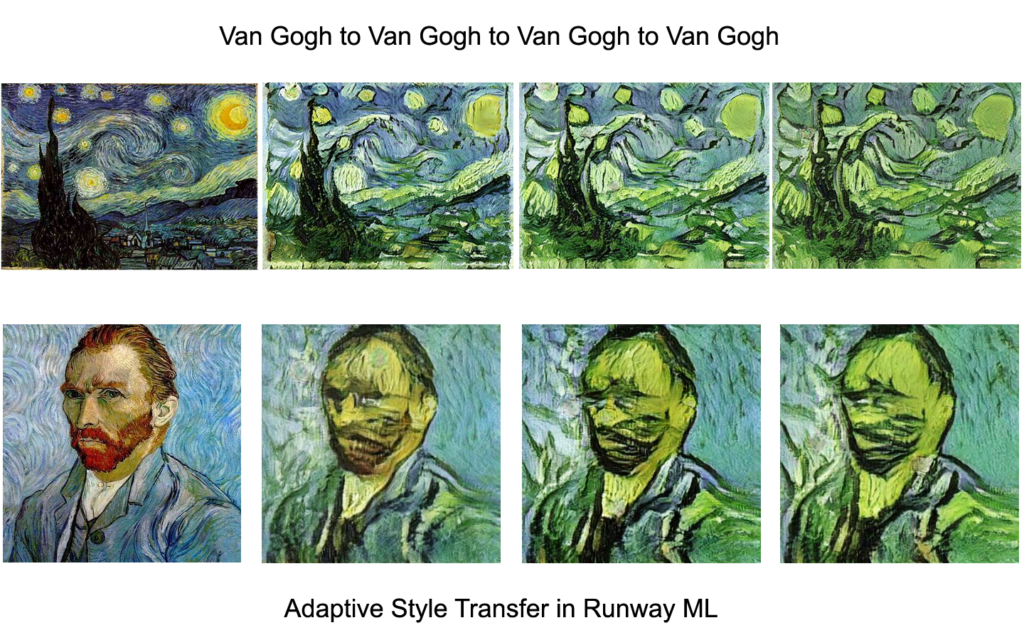

Adaptive Style Transfer was designed, and is mostly used, to apply a painting style to a photo, but I was interested to see what happens when it is applied to paintings, specifically when it is applied to itself.

This yields the paradoxical result of the model trying to make an actual Van Gogh painting more Van-Gogh-like. It would be interesting to run this process repeatedly and see what we get after 100 runs. I hope to find out how to do that by linking Runway to a code platform like p5.js.

I’m interpreting these results in relation to the concept of content loss that is discussed in the research paper related to the model. Applying style transfer to an image is actually taking content away from it, to create a more abstract image. This might be an effective approach for an algorithm, but it doesn’t promote any kind of understanding of how artists see the world and what goes into the creative process.

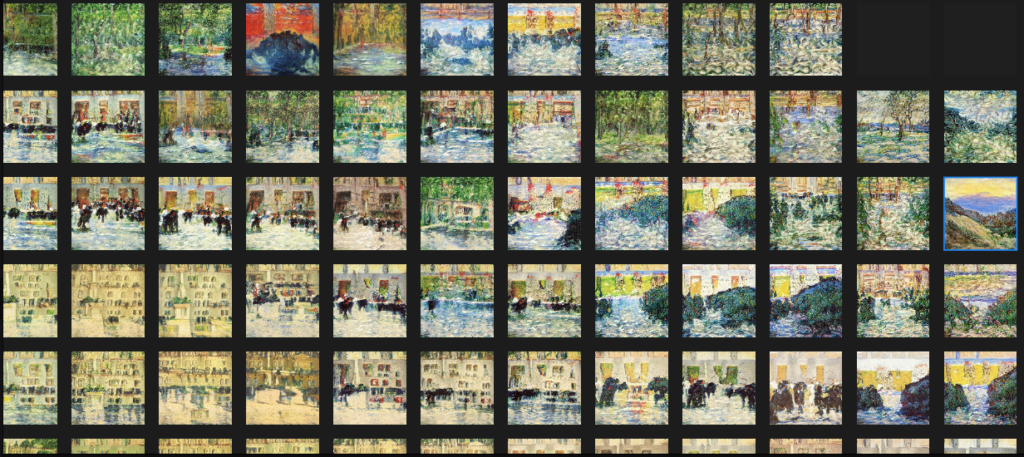

I found it interesting to cruise through the different latent spaces of the image generation models, tracking how the content shifts as the different vectors change their value, and finding the quirks and idiosyncratic character of the content. I would like to be able to travel through these spaces by controlling the vectors directly, either with sliders or with keyframes. I wonder if that’s something that could be implemented into the Runway UI. I didn’t see a clear way to connect the input of a model to an external platform.

As I was not satisfied with how Runway let me browse through the image space, I eventually found a link from the StyleGan Github to a Google Drive folder containing thousands of images.

It was great to be able to browse quickly and find images that were interesting to me – I centered on those images that contained artifacts, that constituted a failure of the model to generate a realistic image. These “bad” results are, I believe, part of what is referred to as the neural aesthetic, part of AI’s current appeal to artists. But as researchers continue to develop and perfect their models, will this these “artifacts” ultimately be eliminated?

Finally I ran a few selected images through the im2txt model that adds captions to photos.

Google Slides presentation

My process was manual (uploading a folder of images to the model, downloading a csv with text captions, then uploading everything to Google Slides). I look forward to learning how to automate that process.

Posted in Spring '20 - Intro to Synthetic Media |